Build and Manage Data Pipelines

The Calibo Accelerate platform offers Data Pipeline Studio (DPS), which lets you create end-to-end no-code and low-code data pipelines in a few clicks. You can add stages such as Data Source, Data Integration, Data Lake, Data Transformation, Data Quality, and Data Visualization to build pipelines that fit your use case.

The Calibo Accelerate platform lets users crawl diverse databases and servers to discover their schema information. Using this crawled metadata, users can apply filters to create a customized data catalog and then use that catalog in their data pipelines for data management and analysis.
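
Filtering crawled schema information into a catalog can be pictured with the minimal sketch below. It assumes a hypothetical `TableMetadata` record for each crawled table and a hypothetical `build_catalog` helper that keeps tables from selected databases whose names match a glob pattern; it does not reflect how the platform actually stores or filters crawled metadata.

```python
import fnmatch
from dataclasses import dataclass, field


@dataclass(frozen=True)
class TableMetadata:
    """Schema information for one crawled table (hypothetical shape)."""
    database: str
    table: str
    columns: tuple = field(default_factory=tuple)


def build_catalog(crawled, databases=None, table_pattern="*"):
    """Keep tables from the selected databases (all if None) whose names match the glob."""
    return [
        t for t in crawled
        if (databases is None or t.database in databases)
        and fnmatch.fnmatchcase(t.table, table_pattern)
    ]


crawled = [
    TableMetadata("sales", "orders", ("id", "amount")),
    TableMetadata("sales", "order_items", ("order_id", "sku")),
    TableMetadata("hr", "employees", ("id", "name")),
]
catalog = build_catalog(crawled, databases={"sales"}, table_pattern="order*")
print([t.table for t in catalog])  # ['orders', 'order_items']
```

Filtering on metadata alone keeps the catalog step cheap: no table data is read until a cataloged table is used in a pipeline.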

DataOps helps you automate and manage data pipelines, providing statistics and insights so that you can act on them quickly. It improves data agility, reduces data-related errors and bottlenecks, and helps you derive more value from your data assets. It also bridges the gap between data teams and business units, ensuring that data is used effectively to drive business insights and innovation.

Currently, DPS supports a wide range of data technologies required in the different stages of a data pipeline. In addition, the Calibo Accelerate platform lets you develop adapters that extend this support and integrate newer technologies according to your organization’s specific requirements.

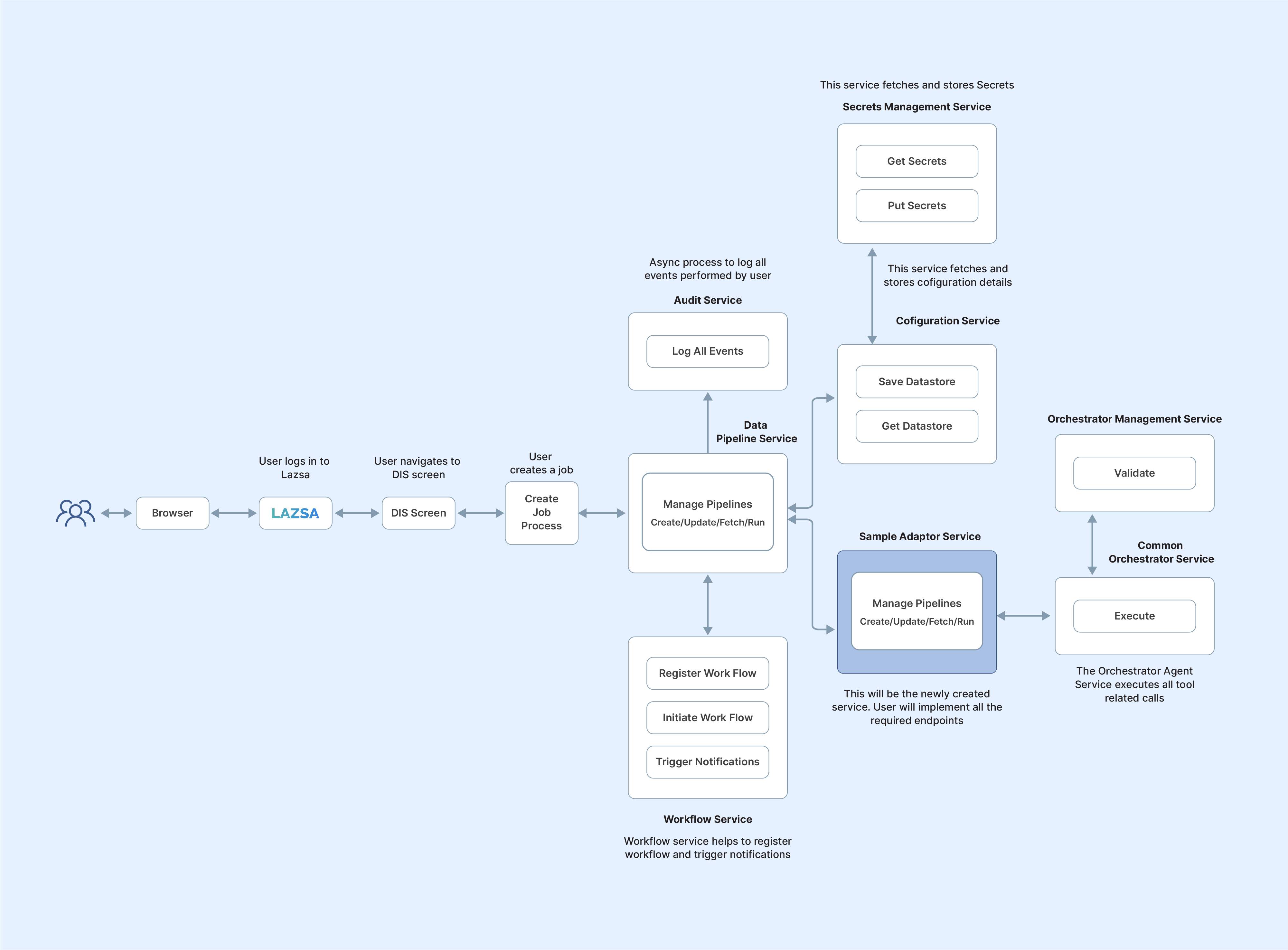

The diagram below shows the flow of various services in an adapter development process.

In a typical data pipeline, the ingestion components provide the compute power to process data and work with various source and target formats. The integration components must be selected appropriately so that the ingestion components can scale with the size of the data. Users can select different mappings, output formats (CSV, Parquet, JSON), and output folders. When developing an adapter, you create new templates rather than following a code-based approach. Configuring some technologies, such as creating warehouses or clusters, requires specific user permissions.
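
To illustrate what a column mapping and an output-format choice do to the data, here is a minimal stdlib-only sketch. The `apply_mapping` and `serialize` helpers are hypothetical and are not part of the platform. Parquet is deliberately left out because it is a binary columnar format that needs a third-party library such as pyarrow.

```python
import csv
import io
import json


def apply_mapping(rows, mapping):
    """Rename source columns to target columns; unmapped columns are dropped.

    A source column missing from a row raises KeyError.
    """
    return [{target: row[source] for source, target in mapping.items()} for row in rows]


def serialize(rows, output_format):
    """Serialize mapped rows as CSV or JSON text (Parquet is not handled here)."""
    fmt = output_format.upper()
    if fmt == "JSON":
        return json.dumps(rows)
    if fmt == "CSV":
        buffer = io.StringIO()
        fieldnames = list(rows[0].keys()) if rows else []
        writer = csv.DictWriter(buffer, fieldnames=fieldnames, lineterminator="\n")
        writer.writeheader()
        writer.writerows(rows)
        return buffer.getvalue()
    raise ValueError(f"unsupported output format in this sketch: {output_format}")


source_rows = [{"cust_id": 1, "amt": 9.5, "note": "x"}]
mapped = apply_mapping(source_rows, {"cust_id": "customer_id", "amt": "amount"})
print(serialize(mapped, "CSV"))
```

Keeping the mapping separate from serialization mirrors the idea that the same mapped records can be written to whichever output format the user selects.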

Here are the high-level steps for extending the DPS functionality to support newer technologies:

- Extend the UI widget framework.
- Extend the backend framework.
- Develop Flyway scripts that define the required update operations in a SQL script or Java code.
- Develop Java code to support the new technology stack, for example, an RDBMS adapter.
- Create a Python job template.
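
The idea of a job template, where user-selected values fill placeholders instead of users writing code, can be sketched with Python's `string.Template`. This is only an illustration under assumptions: the placeholder names (`source_path`, `output_format`, `output_folder`) and the `render_job` helper are hypothetical and are not the platform's actual template variables or format.

```python
from string import Template

# Hypothetical job template; the placeholder names are illustrative only.
JOB_TEMPLATE = Template('''\
SOURCE_PATH = $source_path
OUTPUT_FORMAT = $output_format
OUTPUT_FOLDER = $output_folder


def run():
    print(f"Reading {SOURCE_PATH}, writing {OUTPUT_FORMAT} to {OUTPUT_FOLDER}")
''')


def render_job(params):
    """Fill every placeholder with a Python literal.

    repr() keeps the generated script syntactically valid even if a value
    contains quotes; Template.substitute raises KeyError for a missing value.
    """
    return JOB_TEMPLATE.substitute({key: repr(value) for key, value in params.items()})


script = render_job({
    "source_path": "s3://example-bucket/in/",
    "output_format": "Parquet",
    "output_folder": "curated/orders",
})
print(script)
```

Using `substitute` rather than `safe_substitute` is a deliberate choice here: a template with an unfilled placeholder fails at render time instead of producing a broken job script.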

What's next? Create Job Templates